NEWS

- The MEMOTION 2 dataset has been released. Please register here to access the dataset.

- The FACTIFY dataset has been released. Please register here to access the dataset.

- All accepted papers will be published in formal proceedings.

- Find the schedule for the De-Factify Workshop @ AAAI-22 here.

ABOUT THE WORKSHOP

Combating fake news is one of the most pressing societal challenges. It is difficult to expose false claims before they cause a lot of damage. Automatic fact/claim verification has recently become a topic of interest among diverse research communities. Research efforts and datasets on text-based fact verification exist, but multi-modal or cross-modal fact verification has received little attention. This workshop will encourage researchers from interdisciplinary domains working on multi-modality and/or fact-checking to come together and work on multimodal (images, memes, videos) fact-checking. At the same time, multimodal hate speech detection is an important problem but has not received much attention. Lastly, learning joint modalities is of interest to both Natural Language Processing (NLP) and Computer Vision (CV) forums.

Rationale: During the last decade, both NLP and CV have made significant progress thanks to the success of neural networks. Multimodal tasks like visual question answering (VQA), image captioning, video captioning, caption-based image retrieval, etc. have moved into the spotlight in both NLP and CV forums. Multimodality is the next big leap for the AI community. De-Factify is a dedicated forum to discuss challenges related to multimodal fake news and hate speech. We also encourage discussion on multi-modal tasks in general.

- Multi-Modal Fact Checking: Social media as a source of news is a double-edged sword. On the one hand, its low cost, easy access, and rapid circulation of information lead people to consume news from social media. On the other hand, it enables the wide spread of fake news, i.e., low-quality news containing false information. Fake news affects everyone, including governments, the media, individuals, public health, law and order, and the economy. Therefore, fake news detection on social media has recently become an appealing research topic. We encourage solutions to fake news such as automated fact checking at scale and early detection of fake news.

- Multi-Modal Hate Speech: Hate speech is defined as speech (or any form of expression) that expresses (or seeks to promote, or has the capacity to increase) hatred against a person or a group of people because of a characteristic they share, or a group to which they belong. Twitter elaborates on this definition in its hateful conduct policy as promoting violence against, directly attacking, or threatening other people on the basis of race, ethnicity, national origin, sexual orientation, gender, gender identity, religious affiliation, age, disability, or serious disease. We encourage work that helps in the detection of multi-modal hate speech.

|

|

|

|

|---|---|---|---|

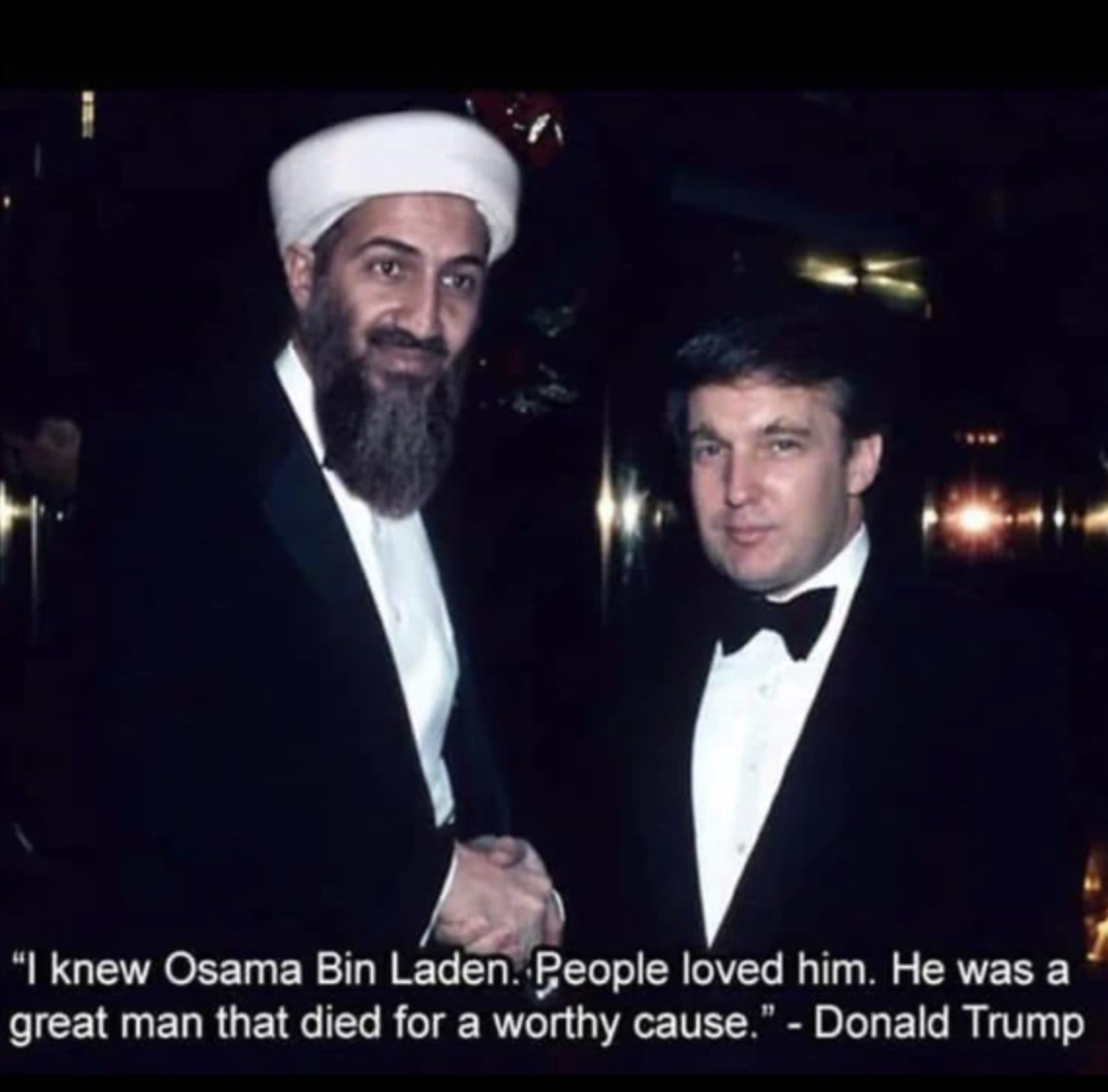

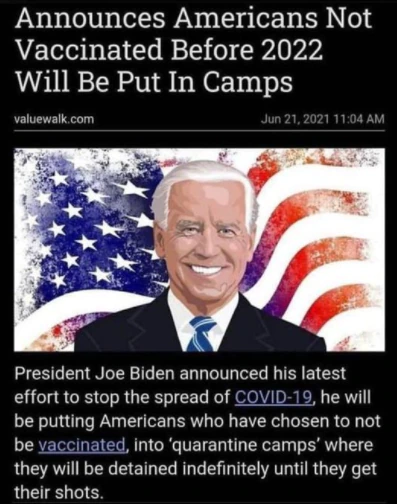

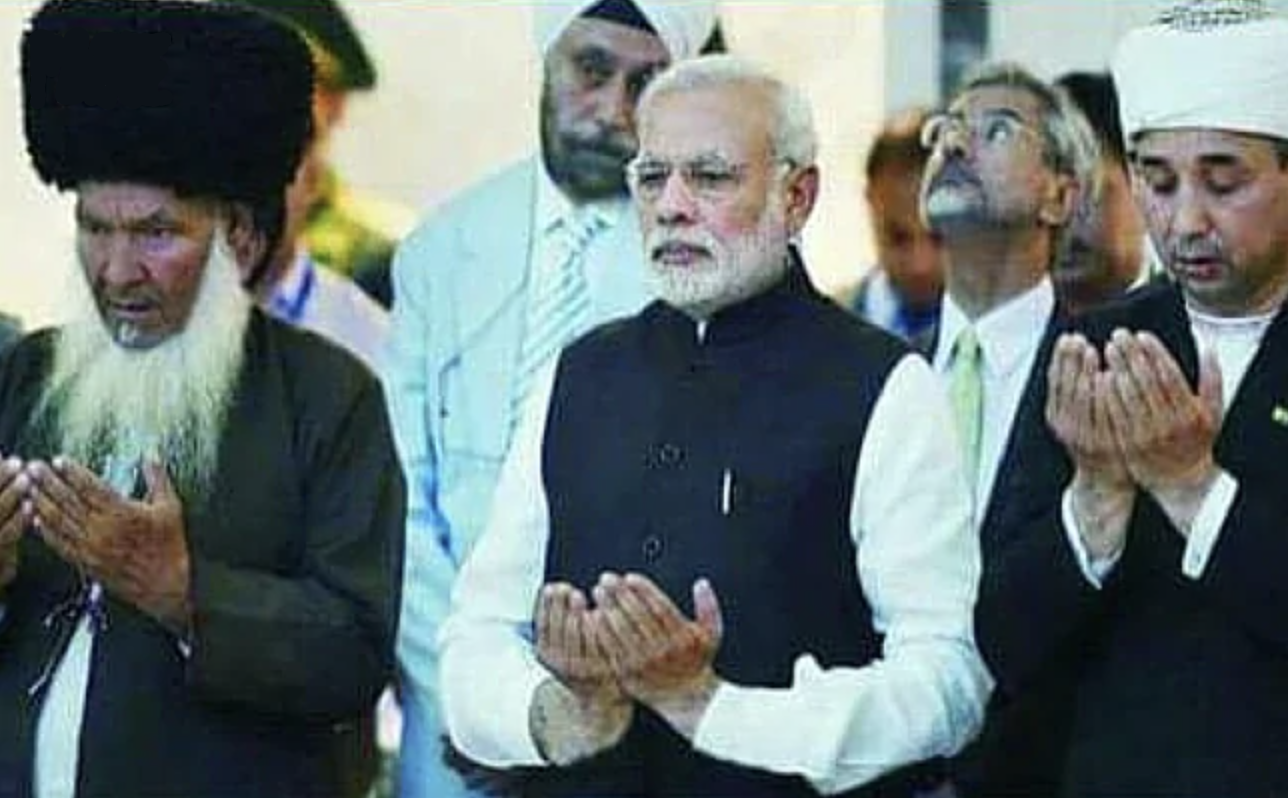

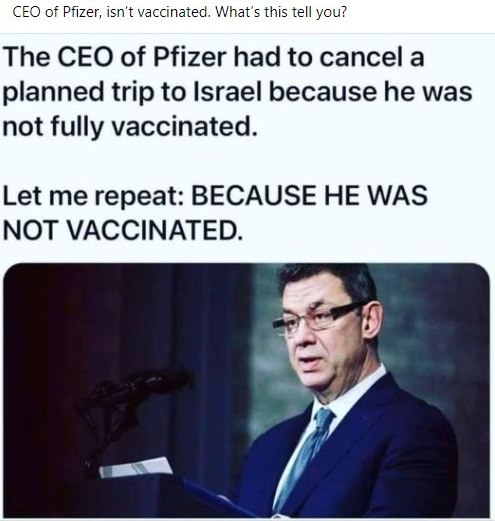

| The image purportedly shows US President Donald Trump in his younger days, shaking hands with global terrorist Osama Bin Laden. It had gone viral during the 2020 US presidential election. The picture also has a quote superimposed on it, praising Laden, which is attributed to Trump. | US President Joe Biden has announced that Americans who have not taken Covid vaccines will be put in quarantine camps and detained indefinitely till they take their shots. - this is absolutely a false claim | A morphed picture of Prime Minister Narendra Modi is going viral on social platforms like Facebook and WhatsApp. Narendra Modi on July 11 was in Turkmenistan where he visited the Mausoleum of the First President of Turkmenistan, in Ashgabat. A picture was clicked during his visit in which Narendra Modi is seen standing with other religious and political leaders of Turkmenistan. While those leaders are seen raising their hands for dua (Islamic way of prayer), Modi is standing folding his hand. A morphed picture of the event is being shared on social media. | Several people are making a claim on their social media accounts that the CEO of Pfizer had to cancel a planned trip to Israel because he was not fully vaccinated. - the claim is not true. |

|

|

|

|

|---|---|---|---|

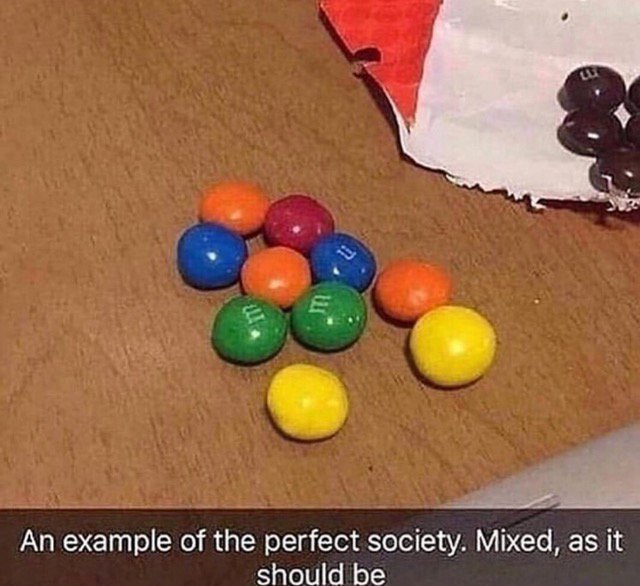

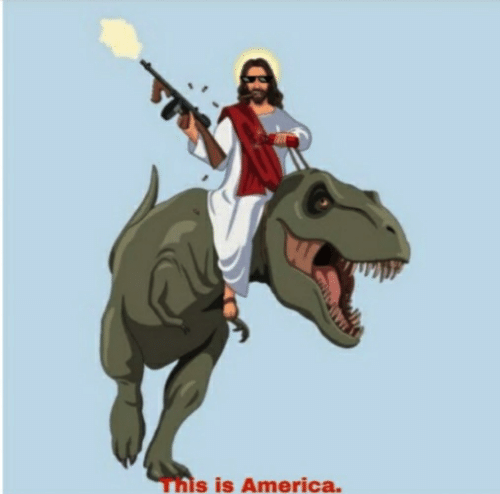

| A simple image of few candies on a table. A set of candies are multicolored, while the others are black. The embeded text has a sarcastic twist to portray how blacks should be excluded from a so-called `perfect society'. This is an example of racist message. | In this image former president Donalnd Trump is visible on a glof cart along with a Muslim man wearing a white middle-east dress. The embeded text is offensive towards muslim. The way it being written as if these are being said by Trump. | In this image Jesus Christ is being depicted on a terrorist avatar - wearing sunglasses, having gun in one hand and riding on a Dinosaur. The tagline says "This is America" - it is offesive to both religious and towards the national image of America. | This image showing a lady in a smiling face, whereas the text message depicts clear hate towards LGBT, rather gay to be very specific. The message clarifies sexual orientation is god's will. |

Shared tasks: We will be conducting two shared tasks:

- FACTIFY - Multi-Modal Fact Verification. Please visit this link for details.

- MEMOTION 2 - Task on analysis of memes. Please visit this link for details.

CALL FOR SUBMISSIONS

REGULAR PAPER SUBMISSION

- Topics of Interest: This is a forum to bring attention towards collecting, measuring, managing, mining, and understanding multimodal disinformation, misinformation, and malinformation data from social media. This workshop covers (but is not limited to) the following topics:

- Development of corpora and annotation guidelines for multimodal fact checking

- Computational models for multimodal fact checking

- Development of corpora and annotation guidelines for multimodal hate speech detection and classification

- Computational models for multimodal hate speech detection and classification

- Analysis of the diffusion of multimodal fake news and hate speech in social networks

- Understanding the impact of hate content on specific groups (like targeted groups)

- Fake news and hate speech detection in low-resource languages

- Hate speech normalization

- Case studies and/or surveys related to multi-modal fake news or hate speech

- Analyzing the behavior and psychology of multi-modal hate speech/fake news propagators

- Real-world/applied tool development for multi-modal hate speech/fake news detection

- Early detection of multi-modal fake news/hate speech

- Use of modalities other than text and images (like audio, video, etc.)

- Evolution of multi-modal fake news and hate speech

- Information extraction, ontology design, and knowledge graphs for multi-modal hate speech and fake news

- Cross-lingual, code-mixed, code-switched multi-modal fake news/hate speech analysis

- Computational social science

- Submission Instructions:

- Long papers: Novel, unpublished, high-quality research papers. 10 pages excluding references.

- Short papers: 5 pages excluding references.

- Previously rejected papers: You can attach the review comments of previously rejected papers (AAAI, NeurIPS) and a 1-page cover letter explaining the changes made.

- Extended abstracts: 2 pages excluding references. Non-archival. Can be previously published papers or work in progress.

- All papers must be submitted via our EasyChair submission page.

- Regular papers will go through a double-blind peer-review process. Extended abstracts may be either single blind (i.e., reviewers are anonymous; authors' names appear on the submission) or double blind (i.e., both authors and reviewers are anonymous). Only manuscripts in PDF or Microsoft Word format will be accepted.

- Paper template: http://ceur-ws.org/Vol-XXX/CEURART.zip or https://www.overleaf.com/read/gwhxnqcghhdt

- 20 Oct 2021: Papers due at 11:59 PM UTC-12

- 20 Nov 2021: Notification of paper decisions by 11:59 PM UTC-12

- 10 Dec 2021: Camera-ready submission of accepted papers due at 11:59 PM UTC-12

- Feb 2022: Workshop

- 15 Nov 2021: Papers due at 11:59 PM UTC-12

- 05 Dec 2021: Notification of paper decisions by 11:59 PM UTC-12

- 20 Dec 2021: Camera-ready submission of accepted papers due at 11:59 PM UTC-12

- Feb 2022: Workshop